At the SPUD Lab, we are interested in enabling all technology users to protect their privacy and security from a human-centric perspective. Unfortunately, in the current state of the world, users' privacy and security are constantly under attack, whether by companies whose business models consist entirely of exploiting user data or by governments that seek to silence dissidents. Examples abound around the world, but none is more evident than the practices of Facebook. Facebook is the largest social networking site that has ever existed and is in part responsible for atrocities around the world. These atrocities are enabled by its data mining and recommendation systems, which are trained on user data. Users of Facebook do not explicitly sign up to have their every interaction analyzed by a multibillion-dollar corporation. Moreover, Facebook can provide law enforcement with user data without users' explicit consent, expanding the state's power to surveil the populace and act against those it deems a problem (e.g., BLM protesters tracked and arrested through social media).

As of now, users have no recourse against these privacy intrusions. There has been work on creating "privacy-focused" social networks, but these have failed to attract anyone but the most privacy-aware. Put bluntly, ordinary users do not care enough to start over somewhere else. Many are shockingly tolerant of bald-faced invasions of their rights; others believe there is no point in protecting themselves because the cause is already lost.

We believe users can take back, through specialized tools, the power that companies like Facebook have been given. Stegoshare is one such tool. It combines obfuscation (steganography) with web3 technologies to subvert existing models of data sharing. Specifically, we use Facebook's content delivery network to deliver cover images that carry a hidden cryptographic hash of a secret image; that hash can then be used to retrieve the secret image from the IPFS network.
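The retrieval chain described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Stegoshare's actual implementation: we assume the secret image has already been added to IPFS and its content identifier (CID) is known, and we hide that CID string in the least significant bit of each byte of raw cover-pixel data, behind a 2-byte length prefix. The function names (`embed_cid`, `extract_cid`, `gateway_url`) and the placeholder CID are hypothetical, and the `ipfs.io` public gateway is only one possible way to resolve a CID.

```python
HEADER_BYTES = 2  # hypothetical 2-byte big-endian length prefix for the hidden CID


def _bits(data: bytes):
    """Yield the bits of `data`, most significant bit first."""
    for byte in data:
        for shift in range(7, -1, -1):
            yield (byte >> shift) & 1


def embed_cid(cover: bytes, cid: str) -> bytearray:
    """Hide `cid` in the least significant bits of `cover` (raw pixel bytes)."""
    payload = cid.encode("ascii")
    if len(payload) > 0xFFFF:
        raise ValueError("CID too long for a 16-bit length header")
    message = len(payload).to_bytes(HEADER_BYTES, "big") + payload
    needed = len(message) * 8  # one cover byte per hidden bit
    if needed > len(cover):
        raise ValueError(f"cover too small: need {needed} bytes, have {len(cover)}")
    stego = bytearray(cover)
    for i, bit in enumerate(_bits(message)):
        stego[i] = (stego[i] & 0xFE) | bit  # overwrite only the lowest bit
    return stego


def _read_bytes(stego: bytes, start_byte: int, count: int) -> bytes:
    """Reassemble `count` hidden bytes, starting at hidden-byte offset `start_byte`."""
    out = bytearray()
    for b in range(start_byte, start_byte + count):
        value = 0
        for i in range(8):
            value = (value << 1) | (stego[b * 8 + i] & 1)
        out.append(value)
    return bytes(out)


def extract_cid(stego: bytes) -> str:
    """Recover the CID hidden by `embed_cid`."""
    length = int.from_bytes(_read_bytes(stego, 0, HEADER_BYTES), "big")
    return _read_bytes(stego, HEADER_BYTES, length).decode("ascii")


def gateway_url(cid: str) -> str:
    """Build a URL that resolves `cid` through the public ipfs.io gateway."""
    return f"https://ipfs.io/ipfs/{cid}"


if __name__ == "__main__":
    cover = bytes(range(256)) * 4  # stand-in for 1024 bytes of raw pixel data
    cid = "QmHypotheticalCidForIllustrationOnly"  # placeholder, not a real CID
    stego = embed_cid(cover, cid)
    print(extract_cid(stego))  # QmHypotheticalCidForIllustrationOnly
    print(gateway_url(extract_cid(stego)))
```

Each cover byte changes by at most one, which is why the hidden CID is visually imperceptible. Note, however, that naive LSB embedding like this would not survive the recompression Facebook applies to uploaded images, so a working tool would need a more robust embedding scheme; the sketch only shows the embed, extract, and resolve round trip.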

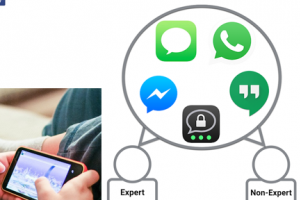

Security and privacy help realize the full potential of computing in society. Without authentication and encryption, for example, few would use digital wallets, social media or even e-mail. The challenge for security and privacy is to realize this potential without imposing too steep a cost. Yet, for the average non-expert, security and privacy are just that: costly, in terms of things like time, attention and social capital. More specifically, security and privacy tools are misaligned with core human drives: a pursuit of pleasure, social acceptance and hope, and a repudiation of pain, social rejection and fear. It is unsurprising, therefore, that for many people, security and privacy tools are begrudgingly tolerated if not altogether subverted. This cannot continue. As computing encompasses more of our lives, we are tasked with making an ever-growing number of security and privacy decisions. Simultaneously, the cost of every breach is swelling. Today, a security breach might compromise sensitive data about our finances and schedules as well as deeply personal data about our health, communications, and interests. Tomorrow, as we enter the era of pervasive smart things, that breach might compromise access to our homes, vehicles and bodies.

We aim to empower end-users with novel security and privacy systems that connect core human drives with desired security outcomes. We do so by creating systems that mitigate pain, social rejection and fear, and that enhance feelings of hope, social acceptance and pleasure. Ultimately, the goal of the SPUD Lab is to design new, more user-friendly systems that encourage better end-user security and privacy behaviors.