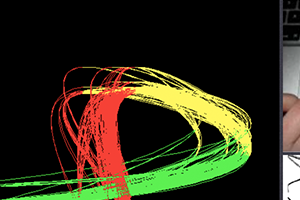

Gestures for interfaces should be short, pleasing, intuitive, and easily recognized by a computer. However, it is a challenge for interface designers to create gestures that are easily distinguishable from users' normal movements. Our tool, MAGIC Summoning, addresses this problem. Given a specific platform and task, we gather a large database of unlabeled sensor data captured in the environments in which the system will be used (an "Everyday Gesture Library," or EGL). MAGIC uses the EGL to suggest gestures that are unlikely to be triggered by users' everyday movements. Once a gesture is selected, MAGIC can output synthetic examples of it to train a chosen classifier.
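One way to make "distinguishable from users' normal movements" concrete is to count how often a candidate gesture's shape already appears in the EGL. The sketch below is a minimal illustration under stated assumptions, not MAGIC's implementation: it assumes a single-axis sensor stream, discretizes each sliding window with Symbolic Aggregate approXimation (SAX), and treats an exact SAX-word match as a would-be false trigger. The function names (`sax_word`, `false_trigger_rate`) and default parameters are hypothetical.

```python
# Illustrative sketch only: hypothetical helper names, and SAX exact-word
# matching is an assumed stand-in for how an EGL might be searched.
from bisect import bisect_left
from statistics import NormalDist, fmean, pstdev

LETTERS = "abcdefghijklmnopqrstuvwxyz"


def znorm(window):
    """Z-normalize a window; a (near-)flat window maps to all zeros."""
    mu, sigma = fmean(window), pstdev(window)
    if sigma < 1e-8:
        return [0.0] * len(window)
    return [(x - mu) / sigma for x in window]


def paa(window, segments):
    """Piecewise Aggregate Approximation: the mean of each equal-length chunk."""
    if len(window) % segments:
        raise ValueError("window length must be divisible by segments")
    size = len(window) // segments
    return [fmean(window[i * size:(i + 1) * size]) for i in range(segments)]


def sax_word(window, segments=4, alphabet=4):
    """Map a 1-D window to a SAX word such as 'abcd'."""
    # Breakpoints that cut N(0, 1) into `alphabet` equally likely bins.
    cuts = [NormalDist().inv_cdf(k / alphabet) for k in range(1, alphabet)]
    return "".join(LETTERS[bisect_left(cuts, v)] for v in paa(znorm(window), segments))


def false_trigger_rate(gesture, egl, segments=4, alphabet=4):
    """Fraction of sliding EGL windows whose SAX word equals the gesture's.

    A high rate suggests everyday movement often resembles the candidate
    gesture, so it would likely cause false positives.
    """
    n = len(gesture)
    windows = len(egl) - n + 1
    if windows <= 0:
        return 0.0
    target = sax_word(gesture, segments, alphabet)
    hits = sum(
        sax_word(egl[i:i + n], segments, alphabet) == target for i in range(windows)
    )
    return hits / windows


if __name__ == "__main__":
    ramp = list(range(8))                               # candidate gesture: a rising motion
    print(sax_word(ramp))                               # abcd
    print(false_trigger_rate(ramp, [0.0] * 20))         # 0.0 (still EGL never matches)
    busy_egl = [0.0] * 10 + list(range(8)) + [0.0] * 10
    print(false_trigger_rate(ramp, busy_egl))           # > 0: the EGL contains a ramp
```

Coarser settings (fewer segments or a smaller alphabet) make more EGL windows collide with the gesture, which gives a more conservative false-trigger estimate; a practical system would also need to handle multi-axis data and matching criteria looser than exact word equality.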

The Contextual Computing Group (CCG) creates wearable and ubiquitous computing technologies using techniques from artificial intelligence (AI) and human-computer interaction (HCI). We focus on giving users superpowers by augmenting their senses, improving learning, and providing intelligent assistants in everyday life. Members' long-term projects have included creating wearable computers (Google Glass), teaching manual skills without requiring the learner's active attention (Passive Haptic Learning), improving hand sensation after traumatic injury (Passive Haptic Rehabilitation), educational technology for the Deaf community, and communicating with dogs and dolphins through computer interfaces (Animal Computer Interaction).